
Deep Learning Course - Level: Intermediate

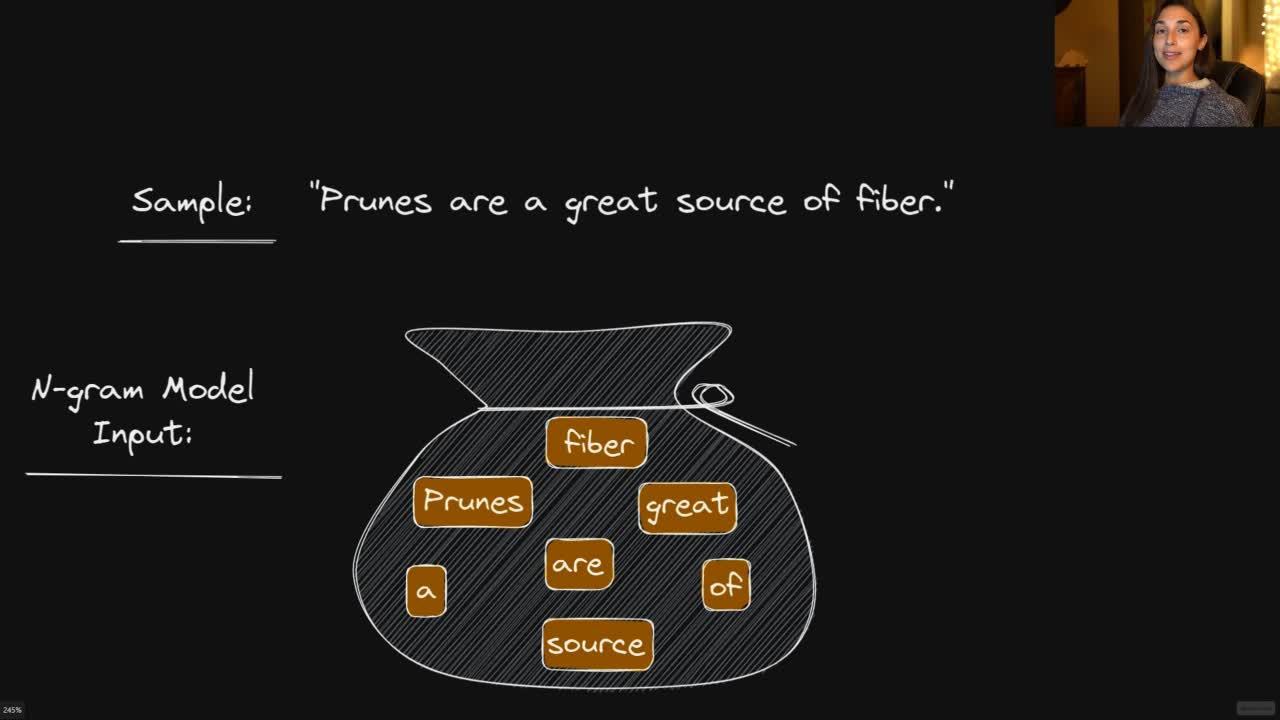

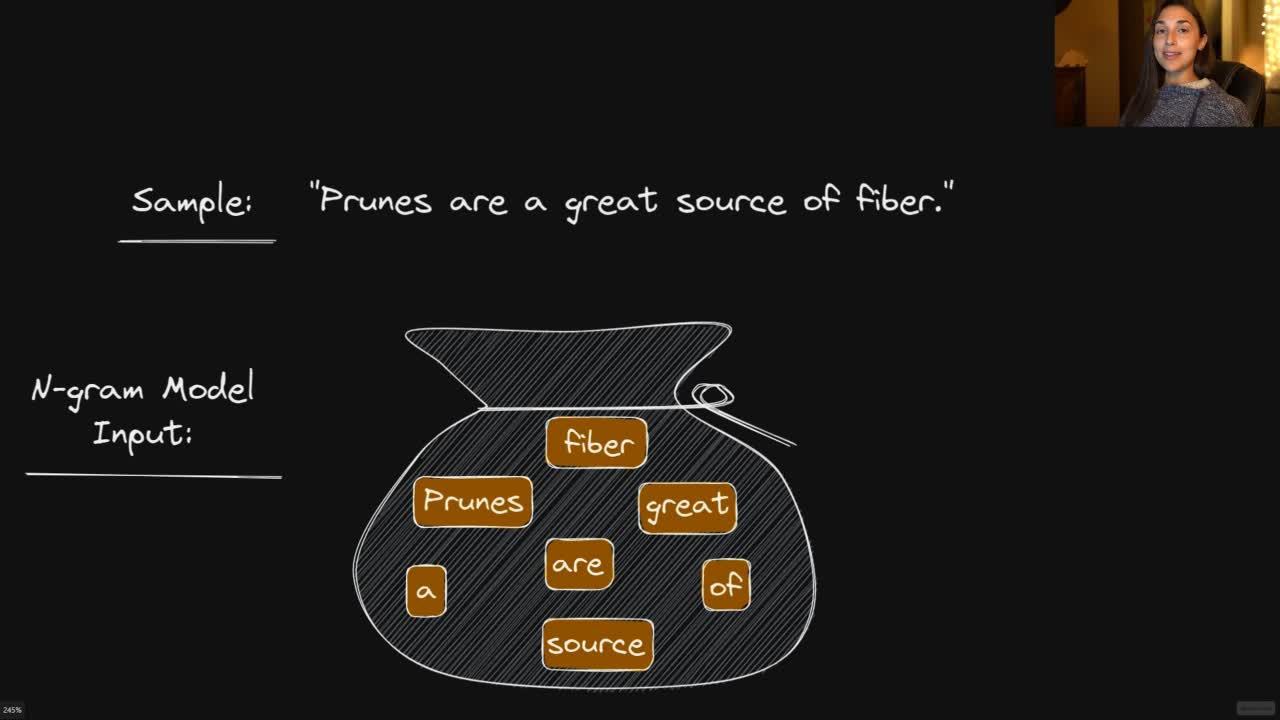

As introduced earlier, N-gram models, also known as bag-of-words models, do not make use of word order, apart from the local order preserved inside each n-gram.

An N-gram model gets its name from the fact that it accepts n-grams as inputs. N-grams include individual tokens (unigrams) as well as sequences of tokens that sit next to each other (bigrams, trigrams, and so on) in any given sample in the dataset.
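
To make this concrete, here is a minimal sketch of n-gram extraction. It assumes simple lowercasing and whitespace tokenization, and the helper name `extract_ngrams` and its `max_n` parameter are hypothetical, chosen only for illustration. A real pipeline would likely use a proper tokenizer and a vocabulary limit.

```python
from collections import Counter


def extract_ngrams(text, max_n=2):
    """Return all n-grams of length 1..max_n from a text sample.

    Assumption: tokens are produced by lowercasing and splitting on whitespace.
    """
    tokens = text.lower().split()
    ngrams = []
    for n in range(1, max_n + 1):
        # Slide a window of size n over the token list.
        for i in range(len(tokens) - n + 1):
            ngrams.append(" ".join(tokens[i:i + n]))
    return ngrams


# Unigrams and bigrams for one sample.
print(extract_ngrams("the cat sat"))
# ['the', 'cat', 'sat', 'the cat', 'cat sat']

# With unigrams only, two samples with different word order look identical.
a = Counter(extract_ngrams("dog bites man", max_n=1))
b = Counter(extract_ngrams("man bites dog", max_n=1))
print(a == b)  # True

# Adding bigrams recovers some local order, so the counts now differ.
a2 = Counter(extract_ngrams("dog bites man", max_n=2))
b2 = Counter(extract_ngrams("man bites dog", max_n=2))
print(a2 == b2)  # False
```

The counts are what the model sees: each sample becomes a bag of n-grams, so any ordering information beyond the window size `max_n` is discarded.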
