Backpropagation explained | Part 2 - The mathematical notation

Backpropagation mathematical notation

Hey, what's going on everyone? In this post, we're going to get started with the math that's used in backpropagation during the training of an artificial neural network. Without further ado, let's get to it.

In our last post on backpropagation, we covered the intuition behind what backpropagation's role is during the training of an artificial neural network. Now, we're going to focus on the math that's underlying backprop.

Recapping backpropagation

Let's recap how backpropagation fits into the training process.

We know that after we forward propagate our data through our network, the network gives an output for that data. The loss is then calculated by comparing that predicted output to the true value for the data.

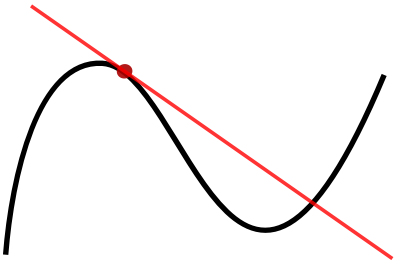

Stochastic gradient descent, or SGD, aims to minimize this loss. To do this, it calculates the derivative of the loss with respect to each of the weights in the network. It then uses these derivatives to update the weights.

It repeats this process over and over until the loss has been minimized. We covered how this update is actually done using the learning rate in our previous post that covers how a neural network learns.
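As a quick reminder of what that update looks like, here is the usual gradient descent rule for a single weight. The symbols are assumptions chosen for illustration, since the post has not fixed them at this point: \(w\) stands for any one weight in the network, \(C\) for the loss, and \(\eta\) for the learning rate.

\[
w \leftarrow w - \eta \, \frac{\partial C}{\partial w}
\]

The derivative on the right-hand side is the piece that backpropagation is responsible for computing.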

When SGD calculates the derivative, it's doing this using backpropagation. Essentially, SGD is using backprop as a tool to calculate the derivative, or the gradient, of the loss function.

Going forward, this is going to be our focus. All the math that we'll be covering in the next few posts will be for the sole purpose of seeing how backpropagation calculates the gradient of the loss function with respect to the weights.

Ok, we've now got our refresher of backprop out of the way, so let's jump over to the math!

Backpropagation mathematical notation

As discussed, we're going to start out by going over the definitions and notation that we'll be using going forward to do our calculations.

This table describes the notation we'll be using throughout this process.

| Symbol | Definition |
|---|---|
| \(L\) | Number of layers in the network |
| \(l\) | Layer index |
| \(j\) | Node index for layer \(l\) |
| \(k\) | Node index for layer \(l-1\) |
| \(y_{j}\) | The value of node \(j\) in the output layer \(L\) for a single training sample |
| \(C_{0}\) | Loss function of the network for a single training sample |
| \(w_{j}^{(l)}\) | The vector of weights connecting all nodes in layer \(l-1\) to node \(j\) in layer \(l\) |
| \(w_{jk}^{(l)}\) | The weight that connects node \(k\) in layer \(l-1\) to node \(j\) in layer \(l\) |
| \(z_{j}^{(l)}\) | The input for node \(j\) in layer \(l\) |
| \(g^{(l)}\) | The activation function used for layer \(l\) |
| \(a_{j}^{(l)}\) | The activation output of node \(j\) in layer \(l\) |
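To see how these symbols read in practice, here is a small worked instance. The specific index values in the first line are chosen only for illustration. The last two lines assume the standard definitions, which this post does not spell out: the input to a node is the weighted sum of the previous layer's activation outputs (bias terms omitted), and the activation output is the layer's activation function applied to that input.

\[
\begin{aligned}
w_{23}^{(2)} &: \text{the weight that connects node } 3 \text{ in layer } 1 \text{ to node } 2 \text{ in layer } 2 \\
z_{j}^{(l)} &= \sum_{k} w_{jk}^{(l)} \, a_{k}^{(l-1)} \\
a_{j}^{(l)} &= g^{(l)}\left(z_{j}^{(l)}\right)
\end{aligned}
\]

Notice that the first subscript on a weight always refers to the node in layer \(l\), and the second to the node in layer \(l-1\).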

Let's narrow in and discuss the indices used in these definitions a bit further.

Importance of indices

Recall at the top of the table, we covered the notation that we'd be using to index the layers and nodes within our network. All further definitions then depended on these indices.

| Symbol | Definition |
|---|---|
| \(l\) | Layer index |
| \(j\) | Node index for layer \(l\) |
| \(k\) | Node index for layer \(l-1\) |

We saw that each of the terms we introduced has a subscript, a superscript, or both. Sometimes the subscript even has two terms, as we saw when we defined the weight between two nodes.

These indices we're using everywhere may make the terms look a little intimidating and overly bulky. That's why I want to focus on this topic further here.

It turns out that if we use these indices properly and we understand their purpose, it's going to make our lives a lot easier going forward when working with these terms and will reduce any ambiguity or confusion, rather than induce it.

In code, when we run a loop, like a for loop or a while loop, the data that the loop iterates over is an indexed sequence.

    // pseudocode (java)
    for (int i = 0; i < data.length; i++) {
        // do stuff with data[i]
    }

Indexed data allows the code to understand where to start, where to end, and where it is, at any given point in time, within the loop itself.

This idea of keeping track of where we are during an iteration over a sequence is precisely why keeping track of which layer, which node, which weight, or really, which anything that we introduced here, is important.
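To make the loop analogy concrete, here is a minimal Java sketch, not taken from the original material, in which the indices \(j\) and \(k\) from the table become loop variables. It assumes that the input \(z_{j}^{(l)}\) is the weighted sum of the activation outputs of layer \(l-1\), with no bias term. The class name, method name, and example numbers are all hypothetical.

```java
// Hypothetical sketch: the indices j and k from the notation table as loop variables.
class IndexedLayer {

    // Computes z_j for every node j in layer l, ASSUMING z_j is the weighted
    // sum of the activation outputs a_k of layer l-1 (no bias term).
    // w[j][k] plays the role of w_jk: the weight that connects node k in
    // layer l-1 to node j in layer l.
    static double[] weightedInputs(double[][] w, double[] aPrev) {
        double[] z = new double[w.length];
        for (int j = 0; j < w.length; j++) {          // j: node index for layer l
            for (int k = 0; k < aPrev.length; k++) {  // k: node index for layer l-1
                z[j] += w[j][k] * aPrev[k];
            }
        }
        return z;
    }

    public static void main(String[] args) {
        // Made-up numbers: 2 nodes in layer l, 3 nodes in layer l-1.
        double[][] w = { {1.0, 2.0, 3.0}, {0.5, 0.0, -1.0} };
        double[] aPrev = {1.0, 2.0, 3.0};
        double[] z = weightedInputs(w, aPrev);
        System.out.println(z[0] + " " + z[1]); // prints 14.0 -2.5
    }
}
```

Java arrays start at 0, so node \(j\) in the math corresponds to position `j - 1` in the array if we number nodes starting from 1. The inner loop over `k` is exactly the kind of iteration that a summation expresses.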

In the math in the upcoming post, we'll be seeing a lot of iteration, particularly via summation. A summation is just the process of iterating over a sequence of numbers and adding them up.

Math example:

Suppose that \(a_{0}, a_{1}, \ldots, a_{n-1}\) is a sequence of \(n\) numbers. The sum of this sequence is given by:

\[
\sum_{j=0}^{n-1} a_{j} = a_{0} + a_{1} + \cdots + a_{n-1}
\]

Code example:

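Suppose that a = {1, 2, 3, 4} is a sequence of numbers. The sum is given by:

    int[] a = {1, 2, 3, 4};
    int sum = 0;
    int j = 0;                // start at the first index
    while (j < a.length) {
        sum = sum + a[j];
        j++;                  // move to the next index so the loop terminates
    }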

Aside from iteration, any time we choose a specific item to work with, like a particular layer, node, or weight, the indexing that we introduced here is what will allow us to properly reference this particular item that we've chosen to focus on.

As it turns out, backpropagation itself is an iterative process, iterating backwards through each layer, calculating the derivative of the loss function with respect to each weight for each layer.
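The backwards iteration described above can be sketched as a loop over the layer index \(l\). This is only an illustration of the visiting order, not an implementation of backpropagation. It assumes layers are numbered \(1\) through \(L\) with layer \(1\) as the input layer, so that only layers \(2\) through \(L\) have incoming weights. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: the order in which a backward pass visits the layers.
class BackwardOrder {

    // Returns the layer indices in the order a backward pass would visit them,
    // ASSUMING layers are numbered 1..numLayers and layer 1 is the input
    // layer, which has no incoming weights.
    static List<Integer> backwardLayerOrder(int numLayers) {
        List<Integer> order = new ArrayList<>();
        for (int l = numLayers; l >= 2; l--) {
            // In a real backward pass, the derivatives of the loss with
            // respect to the weights of layer l would be calculated here.
            order.add(l);
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(backwardLayerOrder(4)); // prints [4, 3, 2]
    }
}
```

The loop starts at the output layer \(L\) and counts down, which is the reverse of the order used when forward propagating data through the network.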

Given this, it should be clear why these indices are required in order to make sense of the math going forward. Hopefully, rather than causing confusion within our notation, these indices can instead become intuition for when we think about doing anything iterative over our network.

Wrapping up

Alright, now we have all the mathematical notation and definitions we need for backprop going forward. At this point, take the time to make sure that you fully understand this notation and the definitions, and that you're comfortable with the indexing that we talked about. After you have this down, you'll be prepared to take the next step.

In our next post, we'll be using these definitions to make some mathematical observations regarding things that we already know about the training process.

These observations are going to be needed in order to progress to the relatively heavier math that comes into play when we start differentiating the loss function in order to calculate the gradient using backprop. See ya next time!
