Backpropagation explained | Part 5 - What puts the "back" in backprop?


Hey, what's going on everyone? In this episode, we'll see the math that explains how backpropagation works backwards through a neural network.

Without further ado, let's get to it.

Setting Things Up

Alright, we've seen how to calculate the gradient of the loss function using backpropagation in the previous episodes. What we haven't yet seen, though, is where the backwards movement comes into play, the movement we talked about when we discussed the intuition for backprop.

Now, we're going to build on the knowledge that we've already developed to understand what exactly puts the back in backpropagation.

The explanation we'll give for this will be math-based, so we're first going to start out by exploring the motivation needed for us to understand the calculations we'll be working through.

We'll then jump right into the calculations, which, as we'll see, are actually quite similar to the ones we worked through in the previous episode.

After we've got the math down, we'll then bring everything together to achieve the mind-blowing realization of how these calculations are mathematically done in a backwards fashion.

Alright, let's begin.

Motivation

We left off from our last episode by seeing how we can calculate the gradient of the loss function with respect to any weight in the network. When we went through the process of showing how that was calculated, recall that we worked with a single weight in the output layer of the network, \(w_{12}^{(L)}\).

Then, we generalized the result we obtained by saying this same process could be applied for all the other weights in the network.

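For this particular weight, \(w_{12}^{(L)}\), we saw that the derivative of the loss with respect to it was equal to

\[ \frac{\partial C_{0}}{\partial w_{12}^{(L)}} = \left(\frac{\partial C_{0}}{\partial a_{1}^{(L)}} \right) \left(\frac{\partial a_{1}^{(L)}}{\partial z_{1}^{(L)}} \right) \left(\frac{\partial z_{1}^{(L)}}{\partial w_{12}^{(L)}}\right) \]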

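Now, what would happen if we chose to work with a weight that is not in the output layer, like the weight \(w_{22}^{(L-1)}\)?

Well, using the formula we obtained for calculating the gradient of the loss, we see that the gradient of the loss with respect to this particular weight is equal to

\[ \frac{\partial C_{0}}{\partial w_{22}^{(L-1)}} = \left( \frac{\partial C_{0}}{\partial a_{2}^{(L-1)}}\right) \left( \frac{\partial a_{2}^{(L-1)}}{\partial z_{2}^{(L-1)}}\right) \left( \frac{\partial z_{2}^{(L-1)}}{\partial w_{22}^{(L-1)}}\right) \]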

Alright, check it out. This equation looks just like the equation we used for the previous weight we were working with.

The only difference is that the superscripts are different because now we're working with a weight in the third layer, which we're denoting as \(L-1\), and then the subscripts are different as well because we're working with the weight that connects the second node in the second layer to the second node in the third layer.

Given this is the same formula, then we should just be able to calculate it in the exact same way we did for the previous weight we worked with in the last episode, right?

Well, not so fast.

So yes, this is the same formula, and in fact, the second and third terms on the right hand side will be calculated using the same exact approach as we used before.

The first term on the right hand side of the equation is the derivative of the loss with respect to this one activation output, and for this one, there's actually a different approach required for us to calculate it.

Let's think about why.

When we calculated the derivative of the loss with respect to a weight in the output layer, we saw that the first term is the derivative of the loss with respect to the activation output for a node in the output layer.

Well, as we've talked about before, the loss is a direct function of the activation output of all the nodes in the output layer. You know, because the loss is the sum of the squared errors between the actual labels of the data and the activation output of the nodes in the output layer.

Ok, so, now when we calculate the derivative of the loss with respect to a weight in layer \(L-1\), for example, the first term is the derivative of the loss with respect to the activation output for node two, not in the output layer, \(L\), but in layer \(L-1\).

And, unlike the activation output for the nodes in the output layer, the loss is not a direct function of this output.

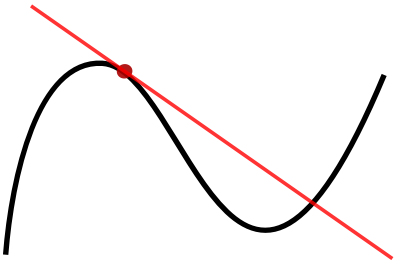

To see why, consider where this activation output sits within the network, and then consider where the loss is calculated at the end of the network. We can see that the output is not being passed directly to the loss.

What we need now is to understand how to calculate this first term then. That's going to be our focus here.

If needed, go back and watch the previous episode where we calculated the first term in the first equation to see the approach we took.

Then, use that information to compare with the approach we're going to use to calculate the first term in the second equation.

| Which | Equation |
|---|---|
| First | \( \frac{\partial C_{0}}{\partial w_{12}^{(L)}} = \left(\frac{\partial C_{0}}{\partial a_{1}^{(L)}} \right) \left(\frac{\partial a_{1}^{(L)}}{\partial z_{1}^{(L)}} \right) \left(\frac{\partial z_{1}^{(L)}}{\partial w_{12}^{(L)}}\right) \) |
| Second | \( \frac{\partial C_{0}}{\partial w_{22}^{(L-1)}} = \left( \frac{\partial C_{0}}{\partial a_{2}^{(L-1)}}\right) \left( \frac{\partial a_{2}^{(L-1)}}{\partial z_{2}^{(L-1)}}\right) \left( \frac{\partial z_{2}^{(L-1)}}{\partial w_{22}^{(L-1)}}\right) \) |

Now, because the second and third terms on the right hand side of the second equation are calculated in the exact same manner as we've seen before, we're not going to cover those here.

We're just going to focus on how to calculate the first term on the right hand side of the second equation, and then we'll combine the results from all terms to see the final result.

Alright, at this point, go ahead and admit it, you're wondering where the backwards movement actually comes in.

I hear you. We're getting there, so stick with me. We have to go through the math first and see what it's doing, and then once we see that, we'll be able to clearly see the whole point of the backwards movement.

So let's go ahead and jump in to the calculations.

Calculations

Alright, time to get set up.

We're going to show how we can calculate the derivative of the loss function with respect to the activation output for any node that is not in the output layer. We're going to work with a single activation output to illustrate this.

Particularly, we'll be working with the activation output for node \(2\) in layer \(L-1\).

This is denoted as

\[ a_{2}^{(L-1)} \]

and the partial derivative of the loss with respect to this activation output is denoted as

\[ \frac{\partial C_{0}}{\partial a_{2}^{(L-1)}} \]

Observe that, for each node \(j\) in \(L\), the loss \(C_{0}\) depends on \(a_{j}^{(L)}\), and \(a_{j}^{(L)}\) depends on \(z_{j}^{(L)}\). The input \(z_{j}^{(L)}\) depends on all of the weights connected to node \(j\) from the previous layer, \(L-1\), as well as all the activation outputs from \(L-1\).

This means that the input \(z_{j}^{(L)}\) depends on \(a_{2}^{(L-1)}\).

Ok, now the activation output for each of these nodes depends on the input to each of these nodes.

In turn, the input to each of these nodes depends on the weights connected to each of these nodes from the previous layer, \(L-1\), as well as the activation outputs from the previous layer.

Given this, we can see how the input to each node in the output layer is dependent on the activation output that we've chosen to work with, the activation output for node \(2\) in layer \(L-1\).

Using similar logic to what we used in the previous episode, we can see from these dependencies that the loss function is actually a composition of functions, and so, to calculate the derivative of the loss with respect to the activation output we're working with, we'll need to use the chain rule.

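The chain rule tells us that to differentiate \(C_{0}\) with respect to \(a_{2}^{(L-1)}\), we take the product of the derivatives of the composed functions. This derivative can be expressed as

\[ \frac{\partial C_{0}}{\partial a_{2}^{(L-1)}} = \sum_{j} \left( \frac{\partial C_{0}}{\partial a_{j}^{(L)}} \right) \left( \frac{\partial a_{j}^{(L)}}{\partial z_{j}^{(L)}} \right) \left( \frac{\partial z_{j}^{(L)}}{\partial a_{2}^{(L-1)}} \right) \]

where the sum runs over every node \(j\) in the output layer \(L\).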

This tells us that the derivative of the loss with respect to the activation output for node \(2\) in layer \(L-1\) is equal to the expression on the right hand side of the above equation.

This is the sum, over each node \(j\) in the output layer \(L\), of the derivative of the loss with respect to the activation output for node \(j\), times the derivative of the activation output for node \(j\) with respect to the input for node \(j\), times the derivative of the input for node \(j\) with respect to the activation output for node \(2\) in layer \(L-1\).

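Now, actually, this equation looks almost identical to the equation we obtained in the last episode for the derivative of the loss with respect to a given weight. Recall that this previous derivative with respect to a given weight that we worked with was expressed as

\[ \frac{\partial C_{0}}{\partial w_{12}^{(L)}} = \left(\frac{\partial C_{0}}{\partial a_{1}^{(L)}} \right) \left(\frac{\partial a_{1}^{(L)}}{\partial z_{1}^{(L)}} \right) \left(\frac{\partial z_{1}^{(L)}}{\partial w_{12}^{(L)}}\right) \]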

Just eye-balling the general likeness between these two equations, we see that the only differences are one, the presence of the summation operation in our new equation, and two, the last term on the right hand side differs.

The reason for the summation is due to the fact that a change in one activation output in the previous layer is going to affect the input for each node \(j\) in the following layer \(L\), so we need to sum up these effects.

Now, we can see that, when \(j=1\), the first and second terms on the right hand side of the equation are the same as the first and second terms in the last equation for \(w_{12}^{(L)}\) in the output layer.

Since we've already gone through the work to find how to calculate these two derivatives in the last episode, we won't do it again here.

We're only going to focus on breaking down the third term, and then we'll combine all terms to see the final result.

The Third Term

Alright, so let's jump in to how to calculate the third term from the equation we just looked at.

The third term is the derivative of the input to any node \(j\) in the output layer \(L\) with respect to the activation output for node \(2\) in layer \(L-1\).

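We know for each node \(j\) in layer \(L\) that

\[ z_{j}^{(L)} = \sum_{k} w_{jk}^{(L)} a_{k}^{(L-1)} \]

where the sum runs over every node \(k\) in layer \(L-1\).

Therefore, we can substitute this expression in for \(z_{j}^{(L)}\) in our derivative.

\[ \frac{\partial z_{j}^{(L)}}{\partial a_{2}^{(L-1)}} = \frac{\partial}{\partial a_{2}^{(L-1)}} \sum_{k} w_{jk}^{(L)} a_{k}^{(L-1)} \]

Expanding the sum, we have

\[ \frac{\partial}{\partial a_{2}^{(L-1)}} \left( \cdots + w_{j2}^{(L)} a_{2}^{(L-1)} + \cdots \right) \]

Due to the linearity of the summation operation, we can pull the derivative operator through to each term since the derivative of a sum is equal to the sum of the derivatives.

\[ \cdots + \frac{\partial}{\partial a_{2}^{(L-1)}} \left( w_{j2}^{(L)} a_{2}^{(L-1)} \right) + \cdots \]

This means we're taking the derivative of each of these terms with respect to \(a_{2}^{(L-1)}\), but actually we can see that only one of these terms contains \(a_{2}^{(L-1)}\).

This means that when we take the derivative of the other terms that don't contain \(a_{2}^{(L-1)}\), these terms will evaluate to zero.

Now taking the derivative of the one term that does contain \(a_{2}^{(L-1)}\), we apply the power rule to obtain the result.

\[ \frac{\partial z_{j}^{(L)}}{\partial a_{2}^{(L-1)}} = w_{j2}^{(L)} \]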

This result says that the input for any node \(j\) in layer \(L\) will respond to a change in the activation output for node \(2\) in layer \(L-1\) by an amount equal to the weight connecting node \(2\) in layer \(L-1\) to node \(j\) in layer \(L\).
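To make this concrete, here's a minimal numerical sketch of the third term. The weights and activation outputs are made-up values, and the names `w_j`, `a_prev`, and `weighted_input` are just illustrative. The list positions are zero-based, so position `2` stands in for node \(2\). We nudge \(a_{2}^{(L-1)}\) up and down a little and measure how much \(z_{j}^{(L)}\) moves.

```python
# Hypothetical weights into one output node j: w_j[k] stands for w_{jk}^{(L)}.
w_j = [0.1, -0.4, 0.3]
# Hypothetical activation outputs from layer L-1: a_prev[k] stands for a_k^{(L-1)}.
a_prev = [0.2, 0.7, 0.5]


def weighted_input(activations):
    """z_j^{(L)}: the weighted sum of the previous layer's activation outputs."""
    return sum(w * a for w, a in zip(w_j, activations))


# Nudge only the activation output at position 2, leaving the others alone.
eps = 1e-6
bumped_up = list(a_prev)
bumped_down = list(a_prev)
bumped_up[2] += eps
bumped_down[2] -= eps

# Central difference estimate of dz_j / da_2.
numeric_slope = (weighted_input(bumped_up) - weighted_input(bumped_down)) / (2 * eps)

# The two printed numbers should agree up to floating point error.
print(numeric_slope, w_j[2])
```

The measured slope matches the weight sitting at position `2`, which is what the derivation says should happen.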

Alright, let's now take this result and combine it with our other terms to see what we get as the total result for the derivative of the loss with respect to this activation output.

Combining the Terms

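Alright, so we have our original equation here for the derivative of the loss with respect to the activation output we've chosen to work with.

\[ \frac{\partial C_{0}}{\partial a_{2}^{(L-1)}} = \sum_{j} \left( \frac{\partial C_{0}}{\partial a_{j}^{(L)}} \right) \left( \frac{\partial a_{j}^{(L)}}{\partial z_{j}^{(L)}} \right) \left( \frac{\partial z_{j}^{(L)}}{\partial a_{2}^{(L-1)}} \right) \]

From the previous episode, we already know what these first two terms evaluate to. So I've gone ahead and plugged in those results, and since we have just seen what the result of the third term is, we plug it in as well. Writing \(y_{j}\) for the label at output node \(j\) and \(g^{(L)}\) for the activation function of layer \(L\), we get

\[ \frac{\partial C_{0}}{\partial a_{2}^{(L-1)}} = \sum_{j} \left( 2\left( a_{j}^{(L)} - y_{j} \right) \right) \left( g^{(L)\prime}\left( z_{j}^{(L)} \right) \right) \left( w_{j2}^{(L)} \right) \]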
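Here's a small sketch that evaluates this combined sum for a toy output layer and checks it against a finite-difference estimate. A few things are assumptions for illustration only: the sigmoid activation, the made-up weights and labels, and the names `W_out`, `a_prev`, `y`, `loss`, and `dloss_da_prev`. The squared-error loss is the one described earlier. List positions are zero-based, so position `2` stands in for node \(2\).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)


# Hypothetical output-layer weights: W_out[j][k] stands for w_{jk}^{(L)}.
W_out = [[0.1, -0.4, 0.3],
         [0.8, 0.2, -0.6]]
a_prev = [0.2, 0.7, 0.5]  # a_k^{(L-1)}, made-up values
y = [1.0, 0.0]            # labels for the two output nodes, made-up values


def loss(activations):
    """C_0: sum of squared errors at the output layer."""
    total = 0.0
    for j, row in enumerate(W_out):
        z_j = sum(w * a for w, a in zip(row, activations))
        total += (sigmoid(z_j) - y[j]) ** 2
    return total


def dloss_da_prev(activations, node):
    """Sum over output nodes j of the three chain rule terms."""
    total = 0.0
    for j, row in enumerate(W_out):
        z_j = sum(w * a for w, a in zip(row, activations))
        a_j = sigmoid(z_j)
        total += 2.0 * (a_j - y[j]) * sigmoid_prime(z_j) * row[node]
    return total


analytic = dloss_da_prev(a_prev, 2)

# Finite-difference check: nudge a_2^{(L-1)} and watch the loss.
eps = 1e-6
up, down = list(a_prev), list(a_prev)
up[2] += eps
down[2] -= eps
numeric = (loss(up) - loss(down)) / (2 * eps)

print(analytic, numeric)
```

The two printed values should agree closely, which is a quick sanity check that the sum over all output nodes really is needed: dropping any one \(j\) from the loop breaks the match.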

Ok, so we've got this full result. Now what was it that we wanted to do with it again?

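Oh yeah, now we can use this result to calculate the gradient of the loss with respect to any weight connected to node \(2\) in layer \(L-1\), like we saw for \(w_{22}^{(L-1)}\), for example, with the following equation

\[ \frac{\partial C_{0}}{\partial w_{22}^{(L-1)}} = \left( \frac{\partial C_{0}}{\partial a_{2}^{(L-1)}}\right) \left( \frac{\partial a_{2}^{(L-1)}}{\partial z_{2}^{(L-1)}}\right) \left( \frac{\partial z_{2}^{(L-1)}}{\partial w_{22}^{(L-1)}}\right) \]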

The result we just obtained for the derivative of the loss with respect to the activation output for node \(2\) in layer \(L-1\) can then be substituted for the first term in this equation.

As mentioned earlier, the second and third terms are calculated using the exact same approach we took for those terms in the previous episode.

Notice that we've used the chain rule twice now, with one of those uses nested inside the other. We first used the chain rule to obtain the result for the entire derivative of the loss with respect to the given weight.

Then, we used it again to calculate the first term within this derivative, which itself was the derivative of the loss with respect to this activation output.

The results from each of these derivatives using the chain rule depended on derivatives with respect to components that reside later in the network.

Essentially, we first need to calculate the derivatives that depend on components later in the network, and then use these derivatives in our calculations of the gradient of the loss with respect to weights that come earlier in the network.

We achieve this by repeatedly applying the chain rule in a backwards fashion.
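As a rough sketch of that backwards order, the snippet below computes the gradient for \(w_{22}^{(L-1)}\) in a tiny three-layer toy network. Everything specific here is an assumption for illustration: the sigmoid activation, the made-up numbers, and the names `a_in`, `W_hidden`, `W_out`, and `forward`. List positions are zero-based. Notice that the output-layer quantities get used first, and only then the layer \(L-1\) quantities.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)


a_in = [0.9, 0.1, 0.4]            # a_k^{(L-2)}, made-up values
W_hidden = [[0.2, -0.5, 0.1],     # W_hidden[j][k] stands for w_{jk}^{(L-1)}
            [0.7, 0.3, -0.2],
            [-0.3, 0.6, 0.5]]
W_out = [[0.1, -0.4, 0.3],        # W_out[j][k] stands for w_{jk}^{(L)}
         [0.8, 0.2, -0.6]]
y = [1.0, 0.0]                    # made-up labels


def weighted_sums(W, a):
    return [sum(w * x for w, x in zip(row, a)) for row in W]


def forward(W_h):
    """Forward pass; returns every intermediate value plus the loss C_0."""
    z_h = weighted_sums(W_h, a_in)
    a_h = [sigmoid(z) for z in z_h]
    z_o = weighted_sums(W_out, a_h)
    a_o = [sigmoid(z) for z in z_o]
    loss = sum((a - t) ** 2 for a, t in zip(a_o, y))
    return z_h, a_h, z_o, a_o, loss


z_h, a_h, z_o, a_o, _ = forward(W_hidden)

# Step 1 (later in the network): dC_0 / da_2^{(L-1)}, summed over output nodes.
dC_da2 = sum(
    2.0 * (a_o[j] - y[j]) * sigmoid_prime(z_o[j]) * W_out[j][2]
    for j in range(len(W_out))
)

# Step 2 (earlier in the network): reuse step 1 as the first term.
grad_w22 = dC_da2 * sigmoid_prime(z_h[2]) * a_in[2]


# Finite-difference check on the same weight.
def loss_with_w22(value):
    W = [list(row) for row in W_hidden]
    W[2][2] = value
    return forward(W)[4]


eps = 1e-6
numeric = (loss_with_w22(W_hidden[2][2] + eps)
           - loss_with_w22(W_hidden[2][2] - eps)) / (2 * eps)

print(grad_w22, numeric)
```

Step 2 can't be evaluated until step 1 is done, and step 1 only involves values from the output layer. That dependency is the "back" we've been talking about.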

Average Derivative of the Loss Function

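Note, to find the derivative of the loss function with respect to this same particular activation output, \(a_{2}^{(L-1)}\), for all \(n\) training samples, we calculate the average derivative of the loss function over all \(n\) training samples. Writing \(C_{i}\) for the loss on sample \(i\), this can be expressed as

\[ \frac{\partial C}{\partial a_{2}^{(L-1)}} = \frac{1}{n} \sum_{i=0}^{n-1} \frac{\partial C_{i}}{\partial a_{2}^{(L-1)}} \]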
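A quick sketch of the averaging step, again under assumptions made for illustration: a sigmoid activation, a squared-error loss per sample, made-up weights and samples, and the hypothetical names `W_out`, `samples`, and `dloss_da_prev`. Each sample gets its own derivative, and we simply take the mean.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)


# Hypothetical output-layer weights: W_out[j][k] stands for w_{jk}^{(L)}.
W_out = [[0.1, -0.4, 0.3],
         [0.8, 0.2, -0.6]]

# Made-up training samples: (activation outputs of layer L-1, labels).
samples = [
    ([0.2, 0.7, 0.5], [1.0, 0.0]),
    ([0.9, 0.1, 0.3], [0.0, 1.0]),
    ([0.4, 0.4, 0.8], [1.0, 0.0]),
]


def dloss_da_prev(a_prev, y, node):
    """Derivative of one sample's loss with respect to a_node^{(L-1)}."""
    total = 0.0
    for j, row in enumerate(W_out):
        z_j = sum(w * a for w, a in zip(row, a_prev))
        a_j = sigmoid(z_j)
        total += 2.0 * (a_j - y[j]) * sigmoid_prime(z_j) * row[node]
    return total


# One derivative per sample, then the average over all n samples.
per_sample = [dloss_da_prev(a_prev, y, 2) for a_prev, y in samples]
average_derivative = sum(per_sample) / len(per_sample)

print(per_sample)
print(average_derivative)
```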

Concluding Thoughts

Whew! Alright, now we know what puts the back in backprop.

After finishing this episode, along with the earlier episodes on backprop that precede it, we should now have a full understanding of what backprop is all about.
