
Deep Learning Course - Level: Beginner

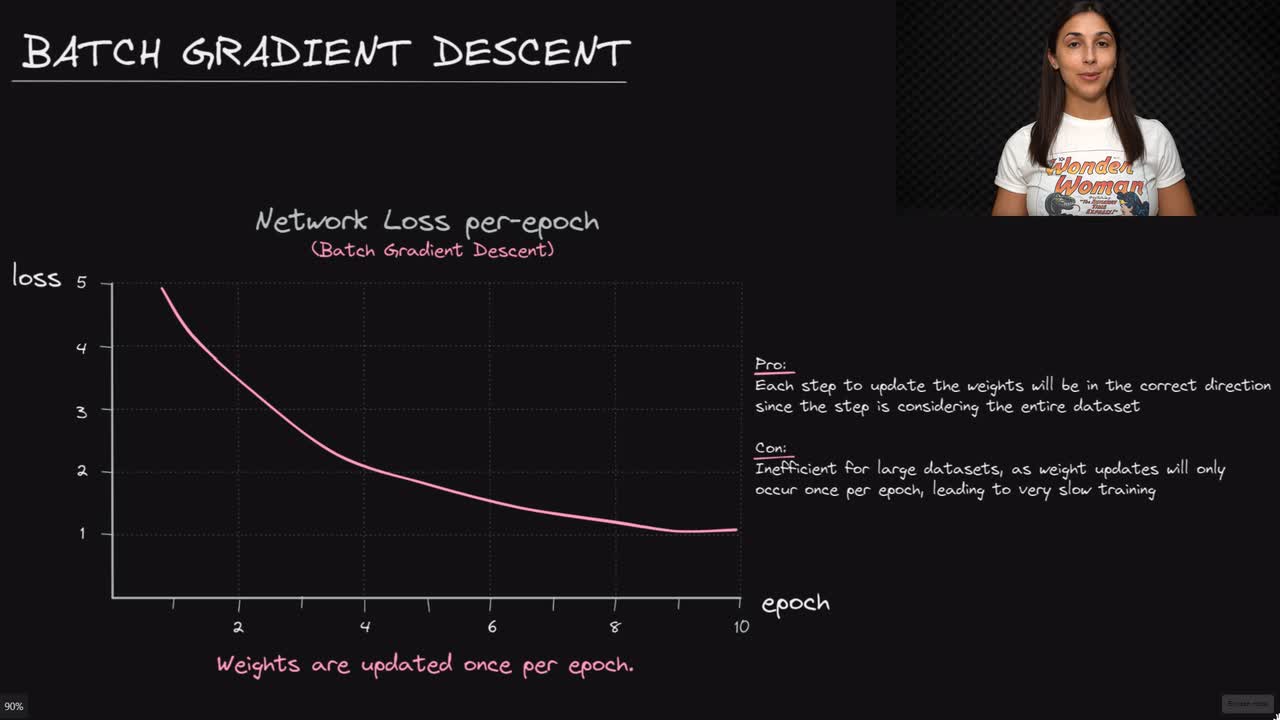

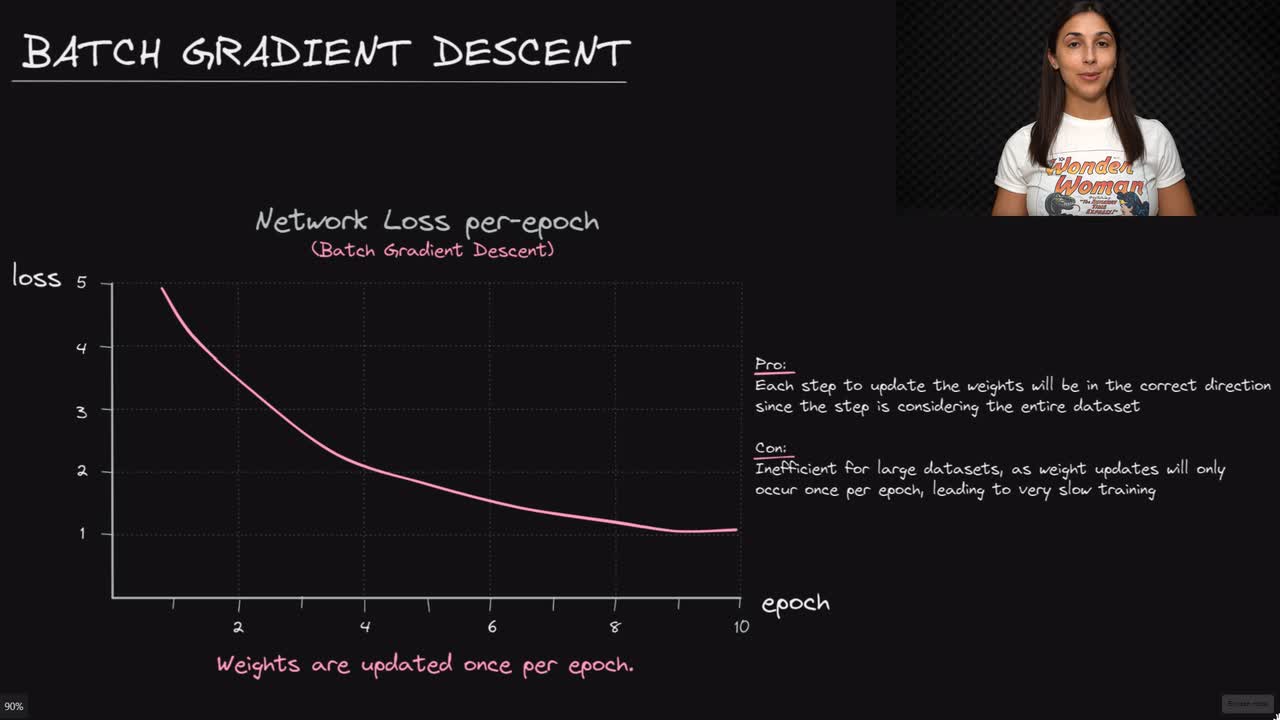

When we create a neural network, each weight between nodes is initialized with a random value. During training, an optimization algorithm iteratively updates these weights, moving them toward the values that minimize the network's loss.
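The random initialization step can be sketched in plain Python. This is a minimal sketch that assumes weights are drawn uniformly from [-0.5, 0.5]. The function name `init_weights` and the layer sizes are hypothetical. Real frameworks typically use schemes such as Xavier/Glorot or He initialization instead.

```python
import random

def init_weights(n_in, n_out, seed=None):
    """Return an n_out x n_in matrix of random weights (hypothetical helper).

    Assumption: each weight is drawn uniformly from [-0.5, 0.5].
    """
    rng = random.Random(seed)  # seeded generator so results are reproducible
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]

# Hypothetical layer: 3 input nodes connected to 2 output nodes,
# so there is one weight per connection (2 x 3 = 6 weights).
weights = init_weights(3, 2, seed=0)
print(weights)
```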

After a forward pass through the network, the gradient of the loss with respect to each weight is calculated (via backpropagation). A gradient descent optimizer then updates each weight by subtracting its gradient, scaled by a learning rate.
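The forward-pass-then-update loop can be illustrated with a deliberately tiny model. This is a minimal sketch under stated assumptions: a single weight `w`, a linear model `y_hat = w * x`, and a squared-error loss. The gradient is derived by hand rather than computed by a framework's backpropagation. The training example, starting weight and learning rate are all hypothetical.

```python
def forward(w, x):
    # Forward pass of a one-weight linear model (assumption for illustration).
    return w * x

def loss(w, x, y):
    # Squared-error loss: L = (w*x - y)^2
    return (forward(w, x) - y) ** 2

def grad(w, x, y):
    # Hand-derived gradient: dL/dw = 2 * (w*x - y) * x
    return 2 * (forward(w, x) - y) * x

def gd_step(w, x, y, lr):
    # Gradient descent update: move w against its gradient, scaled by lr.
    return w - lr * grad(w, x, y)

w = 0.3          # stand-in for a randomly initialized weight
x, y = 2.0, 4.0  # hypothetical training example; the loss is minimized at w = 2.0
lr = 0.05        # hypothetical learning rate

history = []
for step in range(20):
    history.append(loss(w, x, y))  # record the loss before each update
    w = gd_step(w, x, y, lr)

print(w)
```

With these values each update shrinks the distance to the optimal weight by a constant factor, so the recorded loss decreases at every step and `w` approaches 2.0.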
