
Deep Learning Course - Level: Beginner

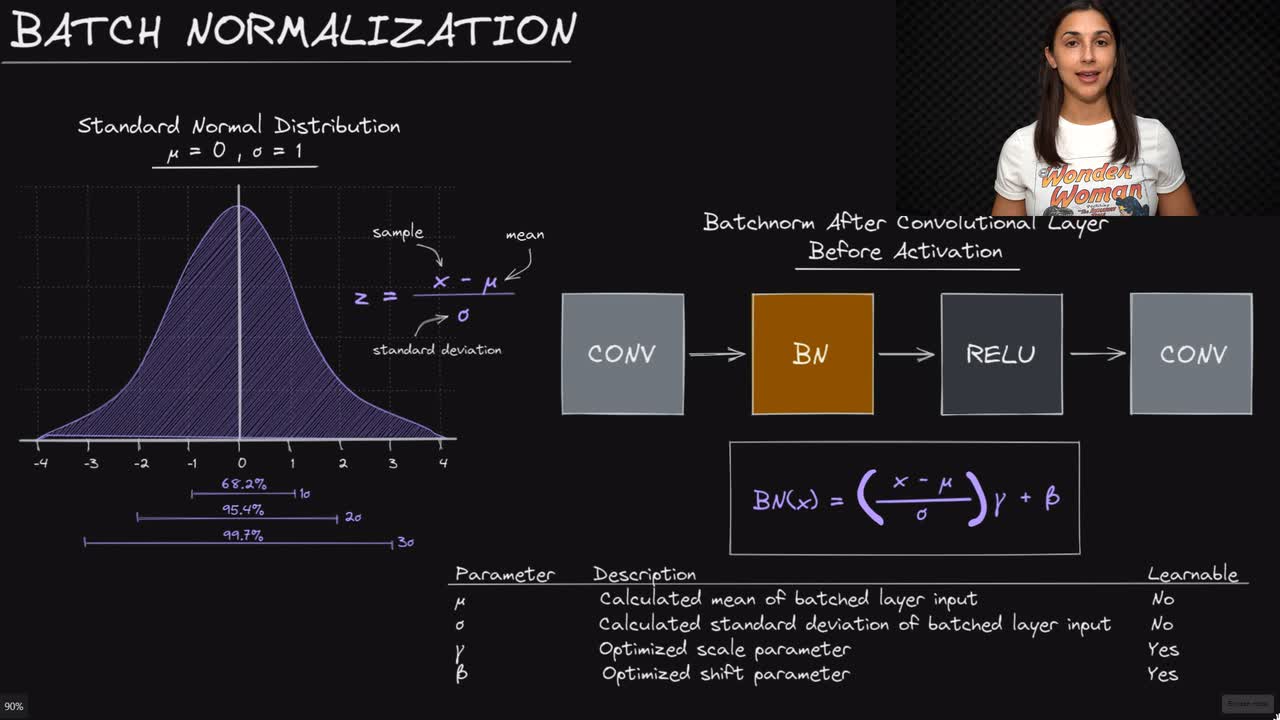

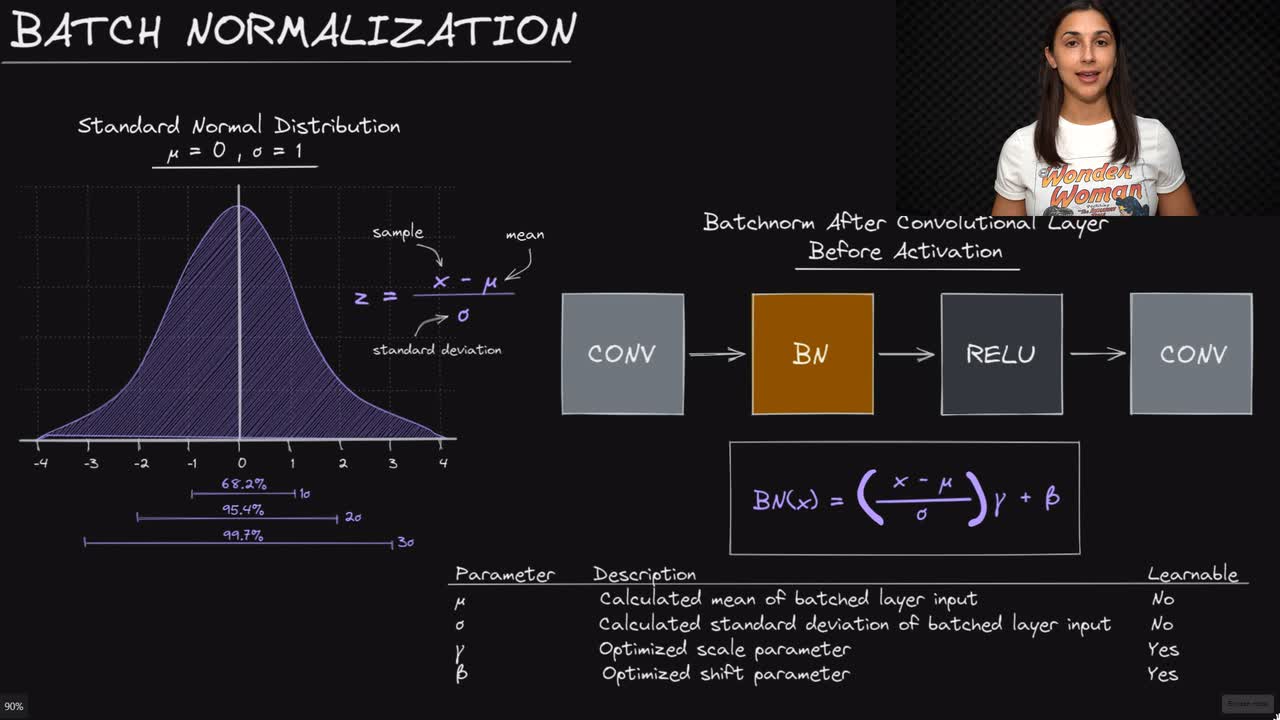

Before training a neural network, we typically normalize the dataset as part of the data pre-processing step, where we prepare the data for training.
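As a rough illustration of what this pre-processing step can look like, here is a minimal sketch of one common approach, standardization, which rescales each feature to zero mean and unit variance. The `standardize` helper, the `eps` parameter, and the sample values are assumptions made up for this example, not something taken from the course material.

```python
import numpy as np

def standardize(X, eps=1e-8):
    """Rescale each feature (column) to zero mean and unit variance.

    eps is a small constant (an assumption of this sketch) that guards
    against division by zero when a feature is constant.
    """
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    return (X - mean) / (std + eps), mean, std

# Hypothetical training data: two features on very different scales.
X_train = np.array([
    [5.0, 20000.0],
    [8.0, 150000.0],
    [2.0, 60000.0],
    [11.0, 95000.0],
])

X_norm, mean, std = standardize(X_train)
print(X_norm.mean(axis=0))  # each feature mean is now close to 0
print(X_norm.std(axis=0))   # each feature standard deviation is now close to 1
```

In practice, the mean and standard deviation computed on the training set would typically be reused to transform validation and test data, so that all splits are scaled consistently.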

In non-normalized datasets, data points with relatively large values can cause instability in neural networks. These large inputs can cascade through the layers of the network and may cause imbalanced gradients during training, potentially leading to the exploding gradient problem.
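To make the effect of large inputs a little more concrete, the sketch below looks at a single linear neuron with a squared-error loss, where the gradient with respect to the weights is proportional to the input. This is a deliberately simplified stand-in for a full network, and the weights, target, and input values are hypothetical numbers chosen for illustration.

```python
import numpy as np

def grad_wrt_weights(x, w, y):
    """Gradient of loss = 0.5 * (w . x - y)^2 with respect to w.

    By the chain rule this is (w . x - y) * x, so it scales with the input.
    """
    error = np.dot(w, x) - y
    return error * x

# Hypothetical weights and target, shared by both cases.
w = np.array([0.1, 0.1])
y = 1.0

x_raw = np.array([5.0, 60000.0])  # features on very different scales
x_scaled = np.array([0.5, 0.6])   # the same kind of sample after rescaling

g_raw = grad_wrt_weights(x_raw, w, y)
g_scaled = grad_wrt_weights(x_scaled, w, y)

print("raw gradient:   ", g_raw)
print("scaled gradient:", g_scaled)
```

With the raw input, the gradient is orders of magnitude larger overall, and the component tied to the large feature dominates the other one. With the rescaled input, both components are small and of comparable size, which is the kind of balance normalization is meant to encourage.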
