
Deep Learning Course - Level: Beginner

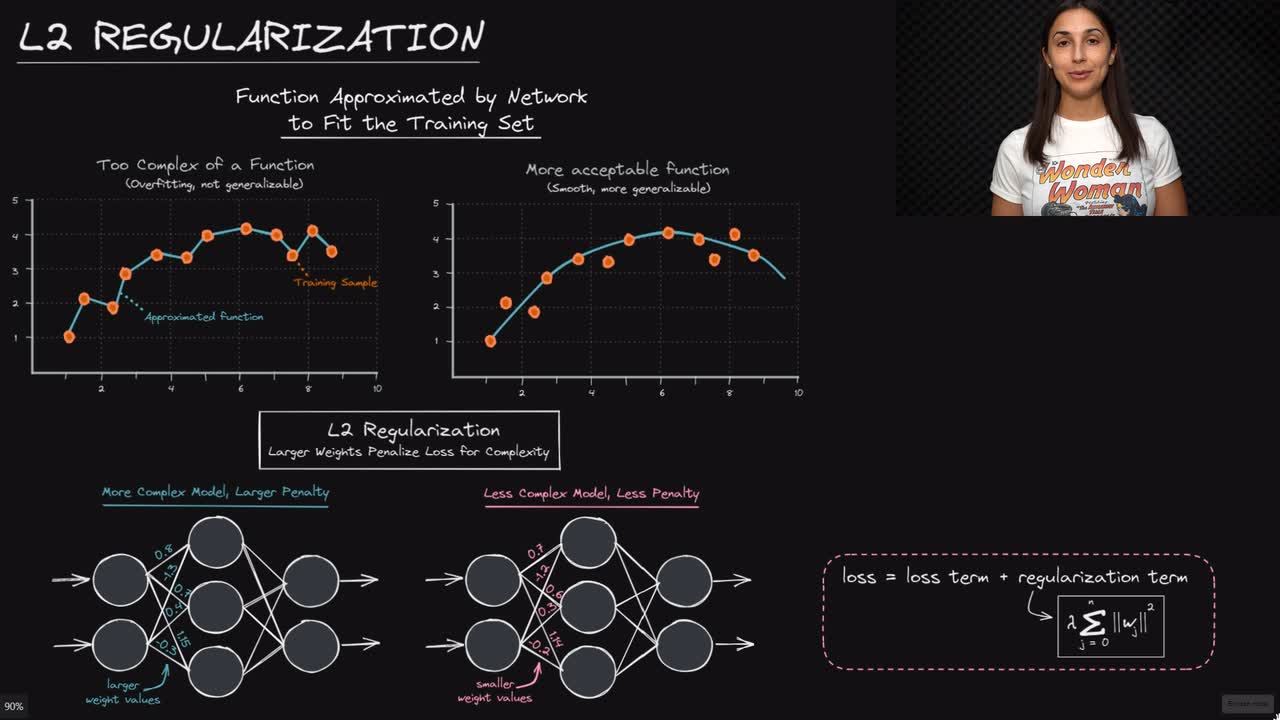

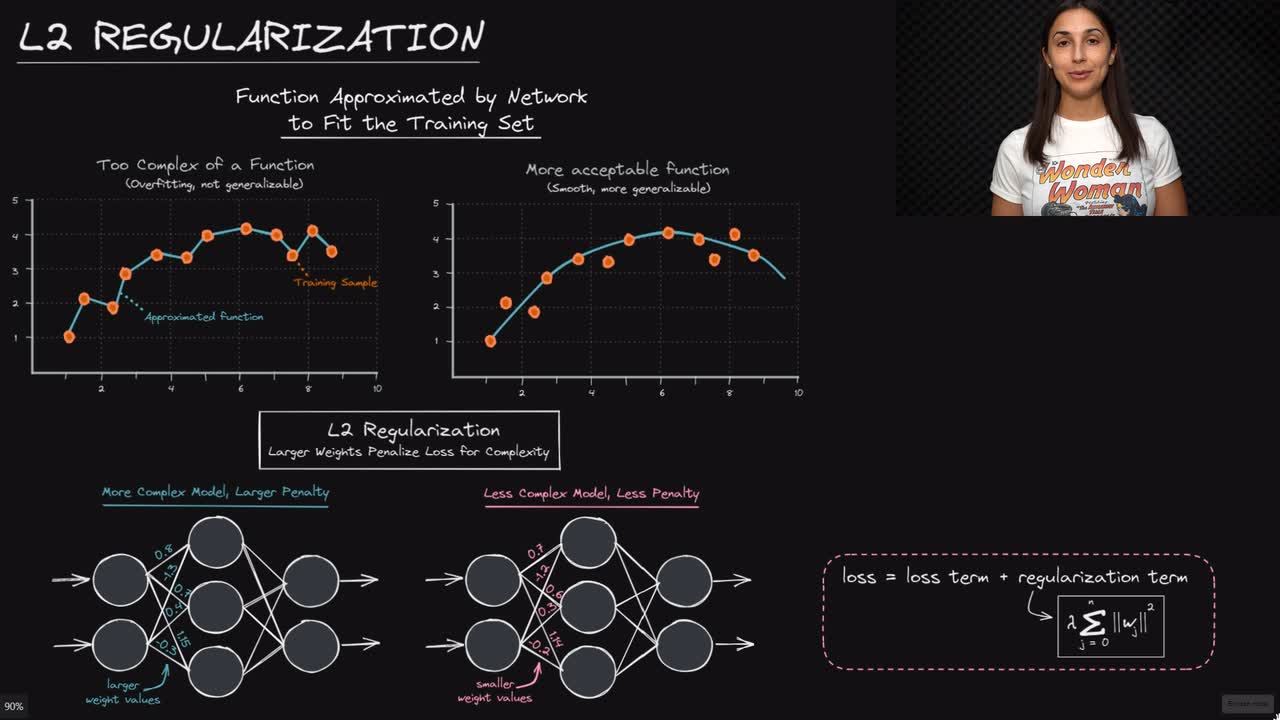

Generally, regularization is any technique that modifies the model, or the learning algorithm more broadly, in an attempt to improve how well it generalizes to new data, without paying for that improvement with a higher training loss.

L2 regularization is a specific regularization technique that helps reduce overfitting by penalizing model complexity: it adds a term proportional to the sum of the squared weights to the loss function. The idea is that certain complexities in the model, which let it fit the training set tightly, may prevent it from generalizing well to unseen data.
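To make the penalty concrete, here is a minimal sketch in plain Python. It assumes a linear model, mean squared error as the base loss, and the common penalty form λ/(2m)·Σw², where m is the number of training examples. The function names (`predict`, `mse`, `l2_penalty`, `regularized_loss`) and the toy data are hypothetical, chosen only for illustration; deep learning frameworks usually provide this penalty as a built-in option instead.

```python
# Minimal sketch of an L2-regularized loss (illustrative assumptions:
# a linear model, MSE as the base loss, penalty = lam / (2 * m) * sum(w ** 2)).

def predict(weights, x):
    """Linear model: dot product of the weights and one example's features."""
    return sum(w * xi for w, xi in zip(weights, x))

def mse(weights, xs, ys):
    """Mean squared error over the m training examples."""
    m = len(xs)
    return sum((predict(weights, x) - y) ** 2 for x, y in zip(xs, ys)) / m

def l2_penalty(weights, lam, m):
    """Complexity penalty: grows with the squared size of the weights."""
    return lam / (2 * m) * sum(w ** 2 for w in weights)

def regularized_loss(weights, xs, ys, lam):
    """Base loss plus the L2 penalty; lam = 0 recovers the plain MSE."""
    return mse(weights, xs, ys) + l2_penalty(weights, lam, len(xs))

# Hypothetical toy data that the weights [1.0, 2.0] fit exactly.
xs = [[1.0, 2.0], [2.0, 0.5], [3.0, 1.0]]
ys = [5.0, 3.0, 5.0]
w = [1.0, 2.0]

print(mse(w, xs, ys))                        # 0.0
print(regularized_loss(w, xs, ys, lam=1.5))  # 1.25
```

Even though these weights fit the training data perfectly, the regularized loss is nonzero because the penalty charges for the size of the weights. Minimizing this loss therefore favors smaller weights, and `lam` controls how strongly that preference is enforced.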
